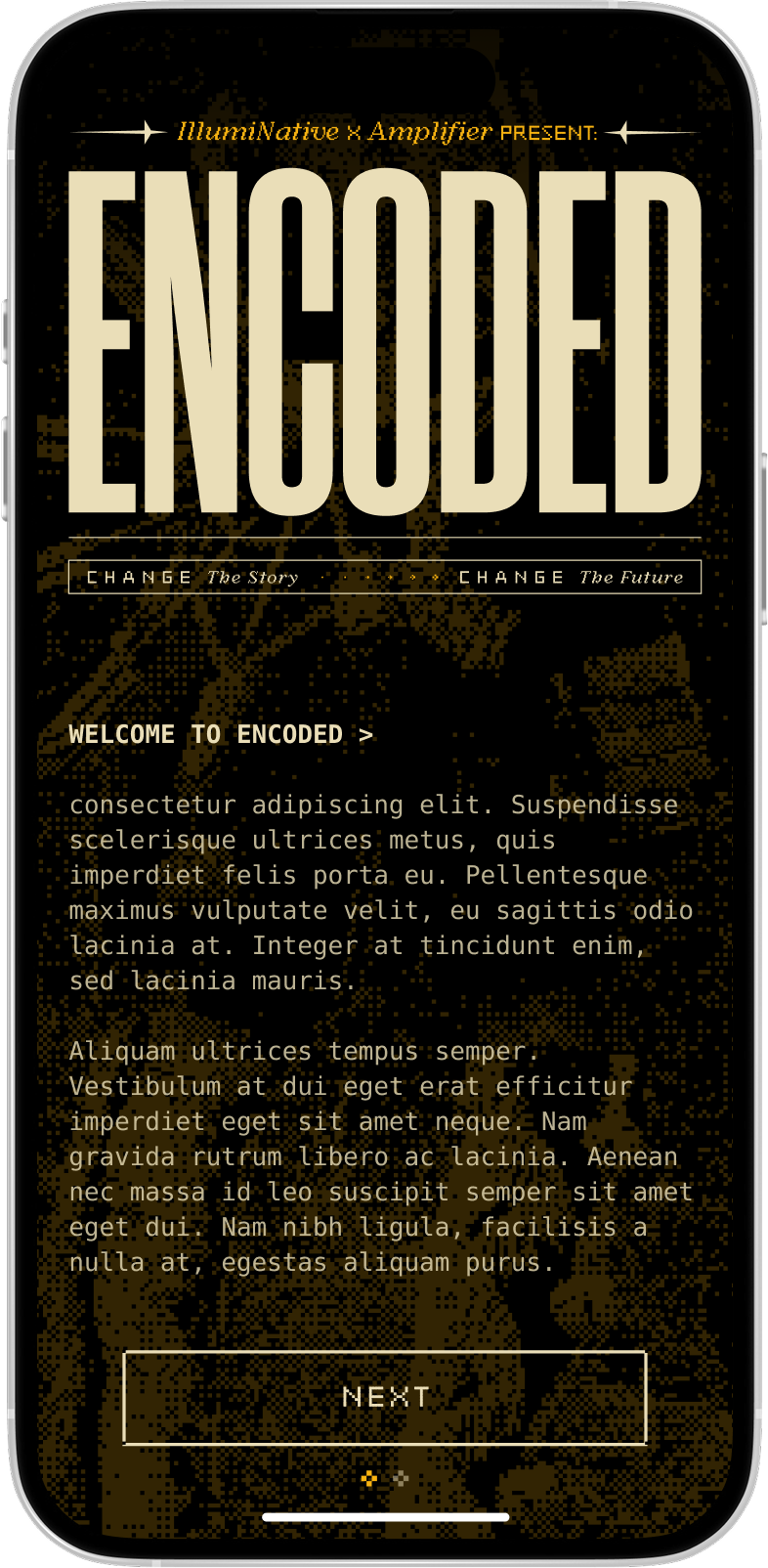

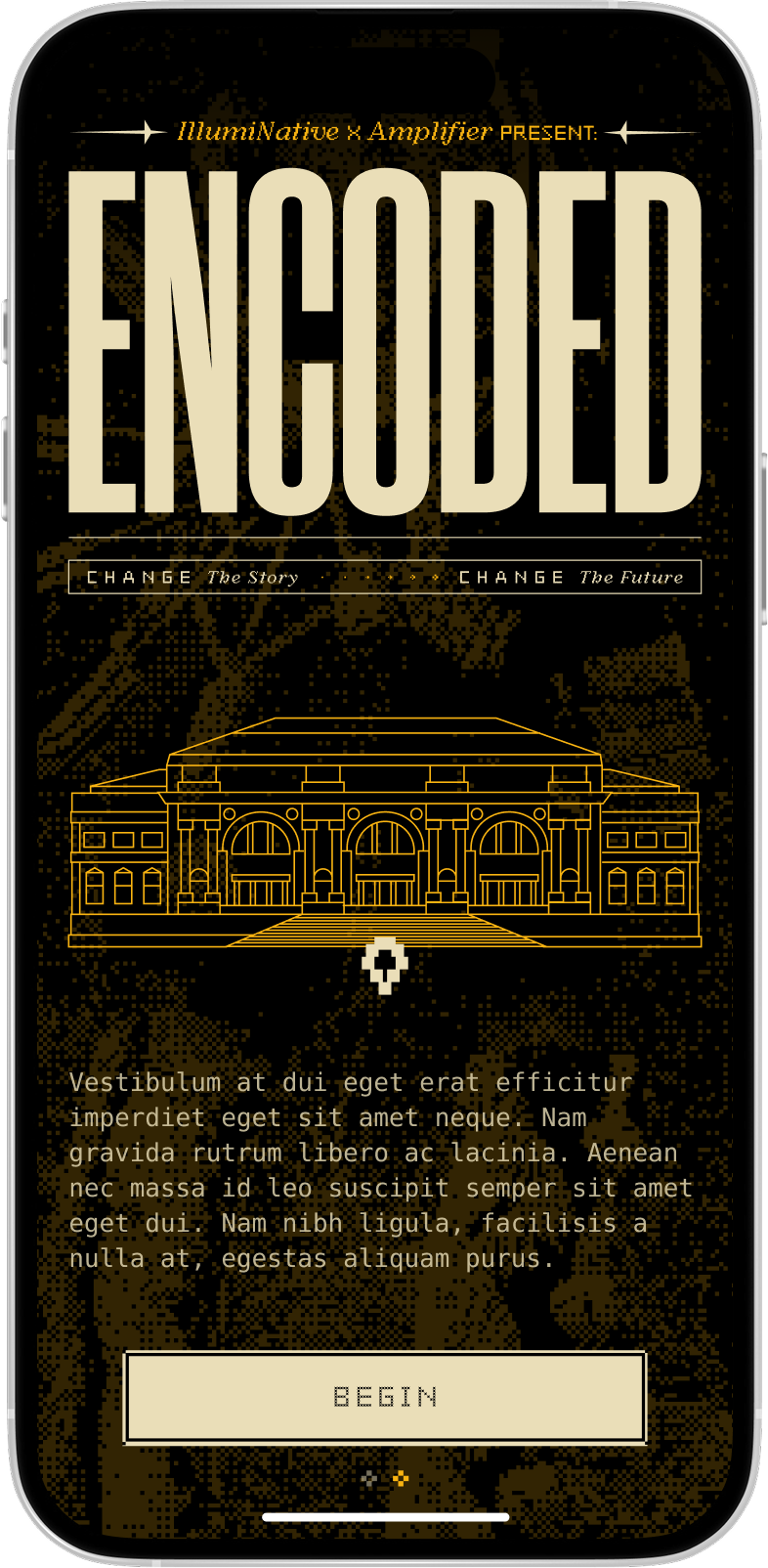

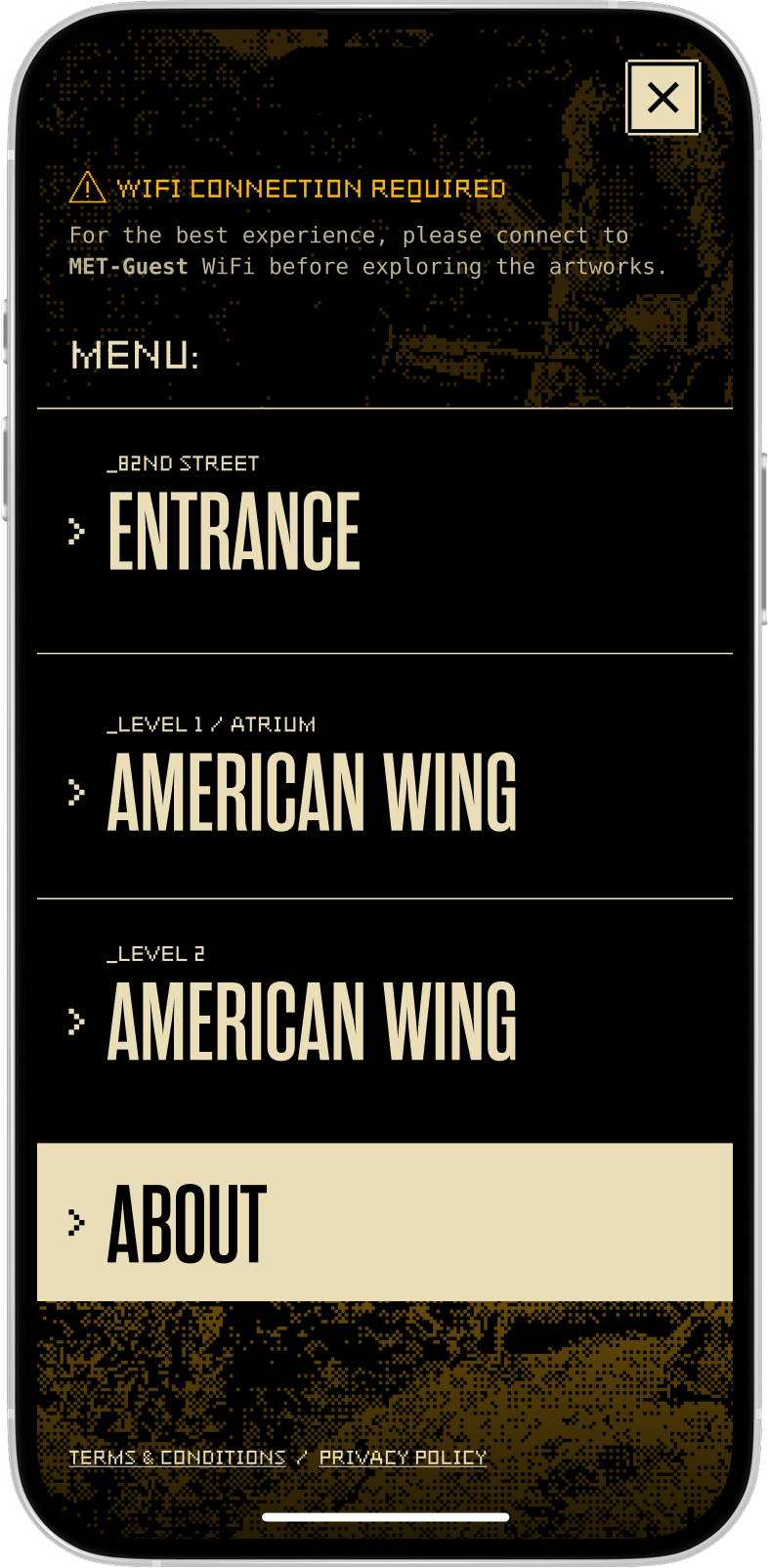

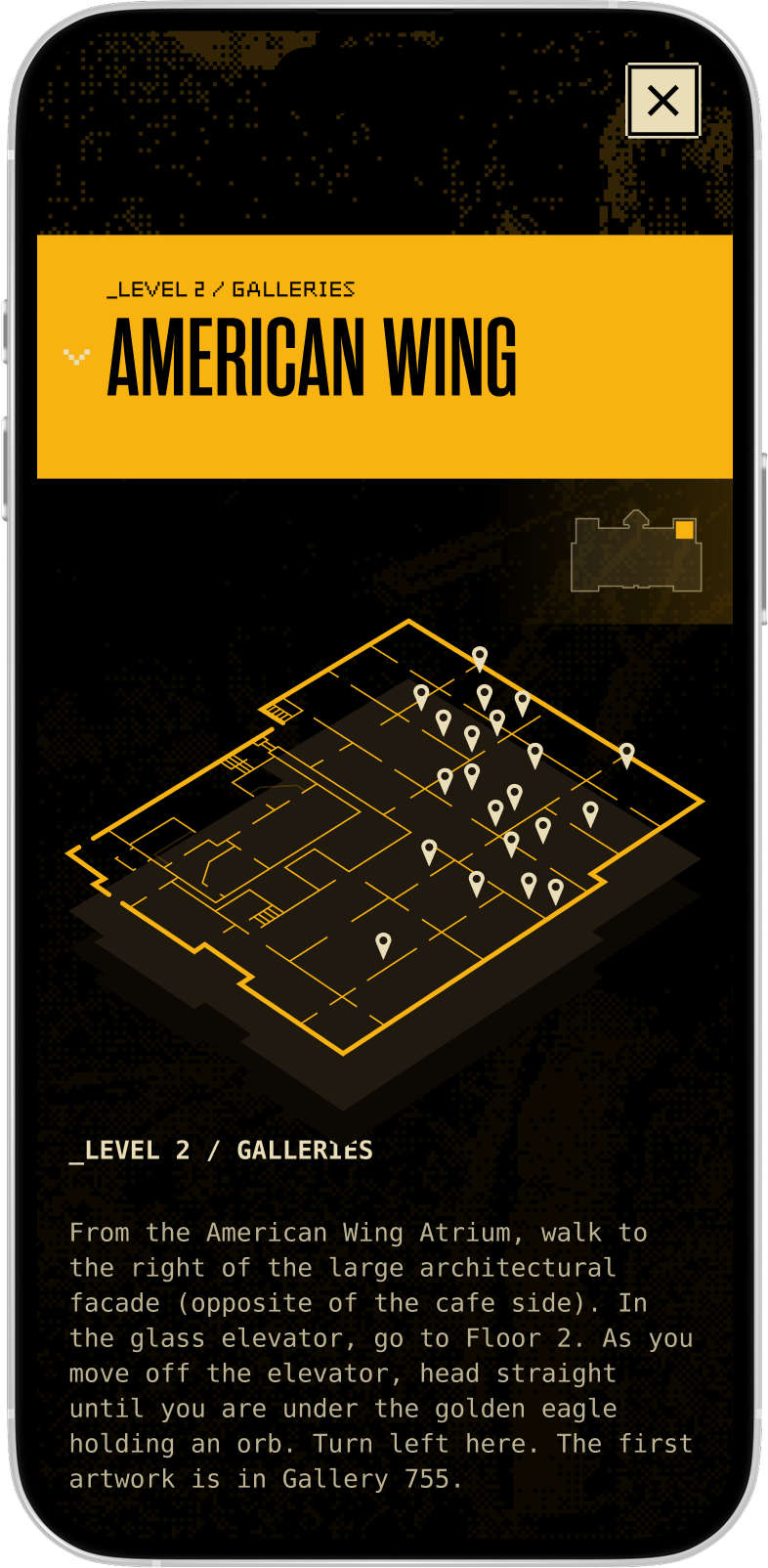

ENCODED is an augmented reality (AR) intervention envisioned by Amplifier, with technical execution developed jointly by EyeJack and Amplifier. Launched on Indigenous Peoples' Day 2025, the experience takes over The Met's American Wing. Seventeen Indigenous artists from across Turtle Island use AR and sound to reclaim space, expand narratives, and activate the museum in new ways. The experience guides visitors through 25 artworks, bringing each intervention to life when visitors point their cameras at paintings, sculptures, or immersive works.